How to Set Up NGINX Load Balancing: A Step-by-Step Guide

In the last article, I talked about load balancing in the context of Kubernetes.

This time, we will talk about something simpler.

NGINX.

Da dum.

Don't get scared. It may seem daunting at first, but it doesn't have to be.

That's why I wrote this article.

To provide you with a step-by-step guide on setting up load balancers using NGINX.

I also added a short overview of the basics of load balancing and NGINX.

So without further ado, here is today's agenda:

- An overview of load balancing, its types, and its importance.

- An overview of NGINX and its use cases.

- Step-by-step guide on setting up a load balancer with NGINX.

What is Load Balancing?

Load balancing is the process of distributing network traffic over a set of resources that support an application.

Modern applications must handle requests from millions of users and return the correct data quickly and reliably.

There are two main types of load balancers:

- Layer 4 load balancing

- Layer 7 load balancing

What the hell do you mean by layers? Layers of cake?

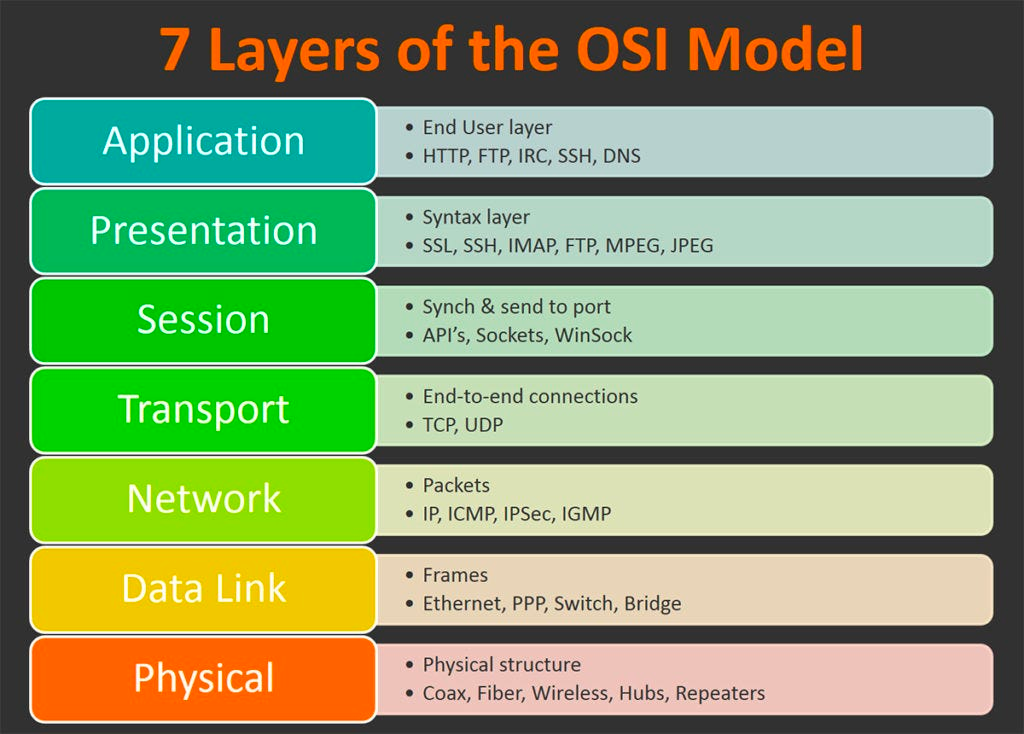

No, I'm talking about the layers of the OSI (Open Systems Interconnection) Model.

It describes the seven layers that computer systems use to communicate over a network.

So the first layer is the physical layer, which is responsible for sending raw bits over a network.

The last layer, the application layer, is where your client (anything that makes an HTTP request) lives.
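To make the numbering concrete, here are the seven standard OSI layers as a small JavaScript lookup table. The osiLayerName helper is purely illustrative and not part of any library. Notice that layer 4, where TCP and UDP live, is the transport layer, and layer 7 is the application layer, where HTTP lives.

```javascript
// The seven OSI layers, indexed so that OSI_LAYERS[n - 1] is layer n.
const OSI_LAYERS = [
  "Physical",     // 1: raw bits over a cable, fiber, or radio link
  "Data Link",    // 2: frames between directly connected devices (e.g. Ethernet)
  "Network",      // 3: addressing and routing between networks (IP)
  "Transport",    // 4: end-to-end delivery between ports (TCP, UDP)
  "Session",      // 5: opening, managing, and closing sessions
  "Presentation", // 6: data formats, encoding, and encryption
  "Application",  // 7: application protocols such as HTTP
];

// Hypothetical helper: return the name of layer 1..7, or throw for anything else.
function osiLayerName(layer) {
  if (!Number.isInteger(layer) || layer < 1 || layer > OSI_LAYERS.length) {
    throw new RangeError(`OSI layer must be an integer from 1 to 7, got ${layer}`);
  }
  return OSI_LAYERS[layer - 1];
}

console.log(osiLayerName(4)); // Transport
console.log(osiLayerName(7)); // Application
```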

Now that we know what the OSI Model is and how it looks, let's take a look at the differences between load balancing in layer 4 vs layer 7.

Layer 4 Load Balancing

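Layer 4 load balancing, also called network load balancing, is done at the transport layer (TCP/UDP). Despite the name, layer 4 is the transport layer; the network layer is layer 3.

Layer 4 load balancing is generally considered faster because it routes traffic based only on IP addresses and ports, without inspecting the contents of the packets.

But this comes with its downsides. The main one is that, because the load balancer only sees TCP/UDP connection information, it can't make complex routing decisions, such as routing by URL path or HTTP header.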

Another benefit of network load balancers is that they are typically cheaper and less complex than layer 7 load balancers.

If you want something simple, then I'd say go for a network load balancer. If not, consider layer 7 load balancing.
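To see why a layer 4 balancer can't do complex routing, here is a minimal JavaScript sketch of the only information it has: the connection's protocol, IP addresses, and ports. Hashing that tuple is one common way to pin a connection to a backend. The FNV-1a hash, the pickLayer4Backend function, and the backend addresses are illustrative assumptions, not how any particular product (NGINX included) is implemented.

```javascript
// Hypothetical backend addresses, for illustration only.
const l4Backends = ["10.0.0.1:7777", "10.0.0.2:7777", "10.0.0.3:7777"];

// 32-bit FNV-1a hash of a string; `>>> 0` keeps the result an unsigned 32-bit integer.
function fnv1a(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Illustrative sketch: a layer 4 balancer sees only connection-level fields,
// never the HTTP path or headers. Hashing the tuple sends every packet of
// one connection to the same backend.
function pickLayer4Backend(conn, servers) {
  const key = `${conn.protocol}|${conn.srcIp}:${conn.srcPort}|${conn.dstIp}:${conn.dstPort}`;
  return servers[fnv1a(key) % servers.length];
}

const conn = { protocol: "tcp", srcIp: "203.0.113.5", srcPort: 51000, dstIp: "198.51.100.1", dstPort: 80 };
console.log(pickLayer4Backend(conn, l4Backends));
```

Nothing in the key describes the request itself, so this balancer has no way to send /api and /images to different servers.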

Layer 7 Load Balancing

A layer 7 load balancer, or application load balancer, makes routing decisions at the application layer (HTTP/HTTPS).

Because it has access to the full HTTP request, it has a lot of flexibility. For example, you can route requests for a specific path to a specific server.

The downside of this approach is that it's generally slower and more expensive than layer 4 load balancing.
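By contrast, a layer 7 balancer can look inside the HTTP request. The minimal JavaScript sketch below routes by URL path prefix and rotates through each pool in turn. The route table, addresses, and pickLayer7Backend function are hypothetical. In NGINX itself, this kind of routing is usually expressed with separate location blocks, each with its own proxy_pass.

```javascript
// Hypothetical route table: path prefix -> pool of backend addresses.
// The first matching prefix wins, so order entries from most to least specific.
const routes = [
  { prefix: "/api/", pool: ["10.0.1.1:7777", "10.0.1.2:7777"] },
  { prefix: "/static/", pool: ["10.0.2.1:8080"] },
  { prefix: "/", pool: ["10.0.3.1:7777"] }, // catch-all, keep it last
];

// One round-robin counter per route, so each pool rotates independently.
const counters = new Map();

// Illustrative sketch of path-based routing at layer 7.
function pickLayer7Backend(request, table) {
  const route = table.find((r) => request.path.startsWith(r.prefix));
  if (!route) {
    throw new Error(`no route matches ${request.path}`);
  }
  const count = counters.get(route.prefix) ?? 0;
  counters.set(route.prefix, count + 1);
  return route.pool[count % route.pool.length];
}

console.log(pickLayer7Backend({ path: "/api/users" }, routes));      // 10.0.1.1:7777
console.log(pickLayer7Backend({ path: "/api/orders" }, routes));     // 10.0.1.2:7777
console.log(pickLayer7Backend({ path: "/static/app.css" }, routes)); // 10.0.2.1:8080
```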

If you want to know more about the differences between layer 4 and layer 7 load balancers, I would highly recommend this video by Hussein Nasser.

Benefits of Load Balancing

- Improved performance – Load balancing ensures that network traffic is evenly distributed between the available resources, which improves performance.

- Scalability – Load balancing allows you to scale horizontally, meaning that instead of getting more powerful servers, you can get more servers.

- High availability – As said above, load balancing allows us to scale horizontally, which means we have multiple servers. If one server fails, the load balancer can detect that and redirect traffic to the remaining working servers.

- Better resource utilization – Load balancing helps to optimize resource utilization by distributing traffic evenly across multiple servers or resources. This ensures that each server or resource is used efficiently, helping to reduce costs and maximize performance.
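The high-availability point can be sketched in a few lines of JavaScript: a round-robin picker that skips any server currently marked unhealthy. The healthy flag and createFailoverBalancer are hypothetical; in a real setup the flag would be maintained by health checks. Open-source NGINX handles this passively, for example by treating a server as unavailable after max_fails failed attempts within fail_timeout.

```javascript
// Illustrative sketch: round robin that skips unhealthy servers,
// so traffic fails over to the rest.
function createFailoverBalancer(servers) {
  let next = 0;
  return function pick() {
    // Try each server at most once per call.
    for (let tried = 0; tried < servers.length; tried++) {
      const server = servers[next];
      next = (next + 1) % servers.length;
      if (server.healthy) {
        return server.address;
      }
    }
    throw new Error("no healthy servers available");
  };
}

// Hypothetical pool using the container ports from later in this article.
// The `healthy` flag is an assumption; health checks would normally set it.
const pool = [
  { address: "127.0.0.1:1111", healthy: true },
  { address: "127.0.0.1:2222", healthy: false },
  { address: "127.0.0.1:3333", healthy: true },
  { address: "127.0.0.1:4444", healthy: true },
];

const pickServer = createFailoverBalancer(pool);
// Prints 127.0.0.1:1111, 127.0.0.1:3333, 127.0.0.1:4444, 127.0.0.1:1111 (2222 is skipped)
for (let i = 0; i < 4; i++) {
  console.log(pickServer());
}
```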

NGINX Overview

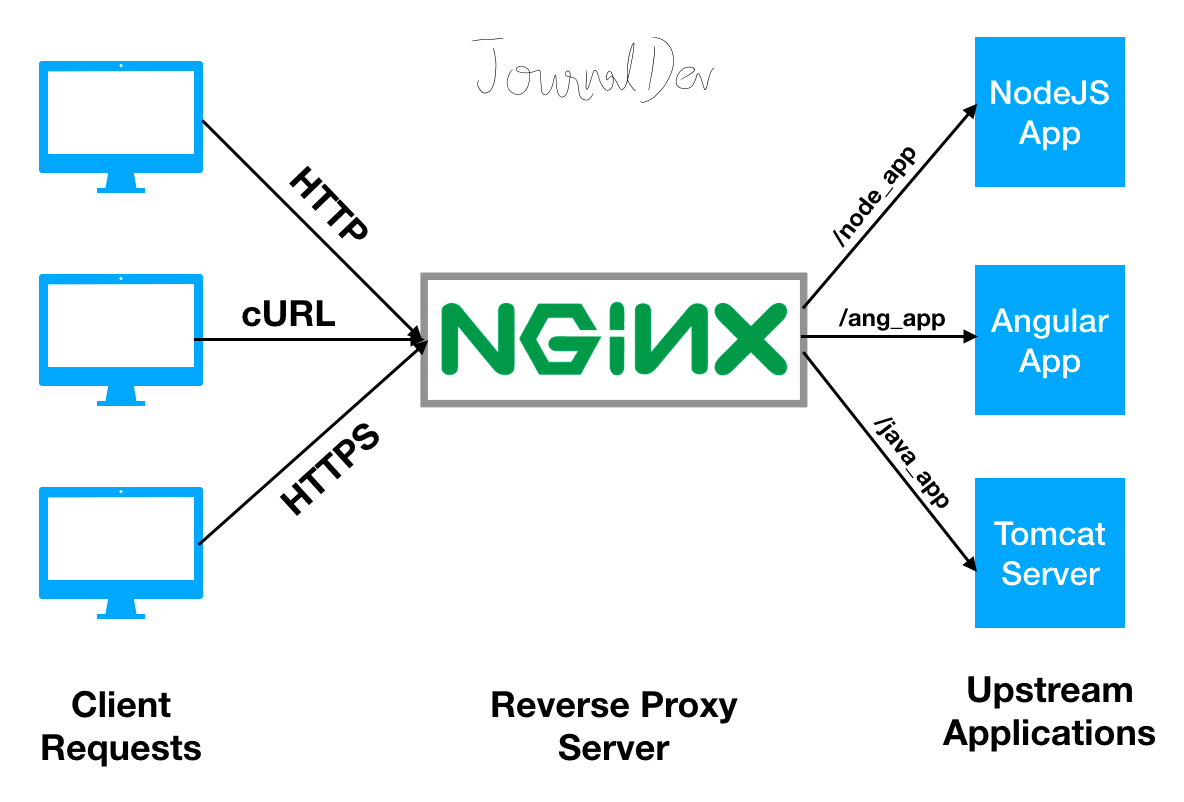

Nginx (pronounced "engine-x") is popular open-source web server software that can also act as a reverse proxy, load balancer, and HTTP cache.

It was created by a guy named Igor Sysoev and first publicly released in 2004. It is one of the most popular web servers in the world, particularly for serving high-traffic websites and applications.

Nginx is known for its high performance, scalability, and reliability, and is often used in conjunction with other software like PHP, Python, and Ruby to serve dynamic content. It is also frequently used to serve static content like HTML, CSS, and JavaScript files.

In addition to its web serving capabilities, Nginx can also be used to perform other functions like SSL termination, request routing, and rate limiting. Its flexibility and versatility have made it a popular choice for developers and system administrators alike.

Setting Up Nginx Load Balancing

For the following examples, we will load balance a simple Express app running in four containers.

Here is the Express app. It's a simple app that exposes a single endpoint that returns the text "I am an endpoint".

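```javascript
const express = require("express");

const app = express();

// Single endpoint that returns a plain-text response
app.get("/", (req, res) => {
  res.send("I am an endpoint");
});

// Inside each container the app listens on port 7777
app.listen(7777, () => {
  console.log("listening on port 7777");
});
```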

Now that we have the Express app working, here is the corresponding Dockerfile:

```dockerfile
FROM node:16

# Create app directory
WORKDIR /usr/src/app

# Install app dependencies
# A wildcard is used to ensure both package.json AND package-lock.json are copied
# where available (npm@5+)
COPY package*.json ./
RUN npm install

# If you are building your code for production
# RUN npm ci --omit=dev

# Bundle app source
COPY . .

EXPOSE 7777

# Runs the "start" script from package.json, which should launch the Express app
CMD [ "npm", "run", "start" ]
```

We then run four instances of the image, each mapped to one of the following host ports:

- 1111

- 2222

- 3333

- 4444

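```shell
# Build the image from the Dockerfile above and tag it express-app
docker build -t express-app .

# Run four containers, mapping host ports 1111-4444 to the app's port 7777
docker run -p 1111:7777 -d express-app
docker run -p 2222:7777 -d express-app
docker run -p 3333:7777 -d express-app
docker run -p 4444:7777 -d express-app
```

Basic Round Robin Load Balancing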

For this example, we will use round-robin as the load-balancing algorithm.

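Round robin means that NGINX sends each new request to the next server in the list, starting over from the top once it reaches the end.

For example, request 1 will go to server 1, request 2 will go to server 2, and so on up to server 4, after which request 5 goes back to server 1.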
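Here is a minimal JavaScript sketch of plain round robin over the four containers from the docker run commands above. It ignores weights and failures, which NGINX also takes into account, so treat it as an illustration of the idea rather than NGINX's actual implementation; createRoundRobin is a hypothetical name.

```javascript
// Simplified sketch: hands out servers in order, wrapping around at the end.
// Ignores weights and failed servers.
function createRoundRobin(servers) {
  let index = 0;
  return function next() {
    const server = servers[index];
    index = (index + 1) % servers.length;
    return server;
  };
}

// The four containers started with docker run above.
const upstream = ["127.0.0.1:1111", "127.0.0.1:2222", "127.0.0.1:3333", "127.0.0.1:4444"];
const nextServer = createRoundRobin(upstream);

for (let request = 1; request <= 6; request++) {
  console.log(`request ${request} -> ${nextServer()}`);
}
// request 1 -> 127.0.0.1:1111
// request 2 -> 127.0.0.1:2222
// request 3 -> 127.0.0.1:3333
// request 4 -> 127.0.0.1:4444
// request 5 -> 127.0.0.1:1111
// request 6 -> 127.0.0.1:2222
```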

This is what the NGINX config looks like:

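```nginx
http {
    # The pool of backend servers: our four containers
    upstream backendserver {
        server 127.0.0.1:1111;
        server 127.0.0.1:2222;
        server 127.0.0.1:3333;
        server 127.0.0.1:4444;
    }

    server {
        listen 8080;

        location / {
            # Forward every request to the upstream group (round robin by default)
            proxy_pass http://backendserver/;
        }
    }
}

# Required top-level context, even when empty
events {
}
```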

We first define an upstream group named backendserver, which is the list of our four containers. We then add this line inside the location block, which tells NGINX to forward incoming requests to that group:

```nginx
proxy_pass http://backendserver/;
```

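Choosing a Load-Balancing Method

The previous example used the default round-robin method.

But open-source NGINX supports several load-balancing methods, including these four:

- Round Robin – Requests are distributed evenly across the servers, with server weights taken into consideration. This method is used by default (there is no directive for enabling it). A weight is set per server in the upstream block, for example server 127.0.0.1:1111 weight=3;

- Least Connections – A request is sent to the server with the fewest active connections, again taking server weights into account. It is enabled by adding the least_conn directive to the upstream block.

- IP Hash – The server is chosen by hashing the client's IP address (the first three octets of an IPv4 address, or the whole IPv6 address). As a result, requests from the same client always go to the same backend server as long as that server is available, which is useful when sessions need to stick to one server. It is enabled with the ip_hash directive.

- Generic Hash – The server to which a request is sent is determined from a user-defined key, which can be a text string, a variable, or a combination of both, such as $request_uri. It is enabled with the hash directive; adding the consistent parameter (hash $request_uri consistent;) switches to consistent hashing, so fewer keys move to a different server when servers are added or removed.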
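The three non-default methods above can be sketched in JavaScript as follows. This is a simplified illustration under a few assumptions: active connection counts are supplied by hand, FNV-1a stands in for whatever hash functions NGINX uses internally, and the generic hash uses plain modulo hashing rather than the consistent hashing NGINX offers. The function names are hypothetical.

```javascript
// 32-bit FNV-1a hash, used here as a stand-in for NGINX's internal hash functions.
function fnv1a(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Least connections: pick the server with the fewest active connections.
// Ties go to the earlier server here; NGINX breaks ties with weighted round robin.
function leastConnections(servers) {
  return servers.reduce((best, s) => (s.active < best.active ? s : best));
}

// IP hash: like NGINX's ip_hash for IPv4, key on the first three octets only,
// so every client in the same /24 network maps to the same server.
// (IPv4 only in this sketch; NGINX uses the whole address for IPv6.)
function ipHash(clientIp, servers) {
  const key = clientIp.split(".").slice(0, 3).join(".");
  return servers[fnv1a(key) % servers.length];
}

// Generic hash: any user-defined key, such as the request URI.
// Plain modulo hashing, so adding or removing a server remaps most keys.
function genericHash(key, servers) {
  return servers[fnv1a(key) % servers.length];
}

const upstreamServers = ["127.0.0.1:1111", "127.0.0.1:2222", "127.0.0.1:3333", "127.0.0.1:4444"];

console.log(
  leastConnections([
    { address: "127.0.0.1:1111", active: 12 },
    { address: "127.0.0.1:2222", active: 3 },
    { address: "127.0.0.1:3333", active: 7 },
  ]).address
); // 127.0.0.1:2222
console.log(ipHash("203.0.113.7", upstreamServers) === ipHash("203.0.113.99", upstreamServers)); // true
console.log(genericHash("/images/cat.png", upstreamServers));
```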

Conclusion

NGINX may seem a bit daunting at first, but I hope this article made it a bit clearer.

If you have any questions, feel free to leave them in the comments and I will do my best to help you.

Also, if you like this type of content, make sure to check out my other articles on similar topics.

Thanks for reading.
