The Beginner's Guide to gRPC with Examples

Nowadays most systems are distributed.

This means that a system is split into multiple components that run on different machines.

But have you ever thought about how they communicate with each other?

How does service A call a function that lives in service B's codebase?

One way to do that is through plain HTTP requests; another popular way is to use a remote procedure call (RPC).

That is what we will talk about today, specifically gRPC, the RPC framework originally developed at Google.

Client-Server Communication

Long ago, communication over the network was pretty simple.

We had the basic client/server communication methods which included things such as:

- Request-response communication – SOAP, REST, and GraphQL.

- Push and bidirectional communication – Server-Sent Events (SSE), where the server pushes events to the client, and WebSockets, where both sides can send messages at any time.

- Custom protocols built directly on raw TCP or UDP, such as RESP (the Redis Serialization Protocol, which runs over TCP).

These protocols were applicable to a lot of use cases but were not suitable for others. Problems included:

Client Library

Any communication protocol needed a client library for the language of choice. For example, if you were communicating using SOAP and were using Java, you would need a third-party library for SOAP in Java.

Another example is REST, for which you need an HTTP library. You might say, "Well, why should I care about this?"

Well, if you are strictly doing client-side development, it doesn't really matter to you, because the browser manages all of this.

Yes, your browser is the biggest HTTP client ever. It manages a lot of things behind the scenes, such as:

- Establishing the connection with the server.

- Negotiating which protocol to use with Application-Layer Protocol Negotiation (ALPN).

- Encrypting your data using TLS (the successor to SSL).

- Checking which versions of HTTP the server supports and using the appropriate one.

The main point is that none of this is easy, yet all you do is call `fetch()` and get back a nicely structured response object.

But here comes the main problem.

What if you are not on the browser?

Your application might be written in some language X. Then you need some sort of third-party HTTP client for X, and you are responsible for it.

Yes, that may not be you specifically, but someone out there is maintaining the third-party HTTP client that you use.

But how could this be a problem?

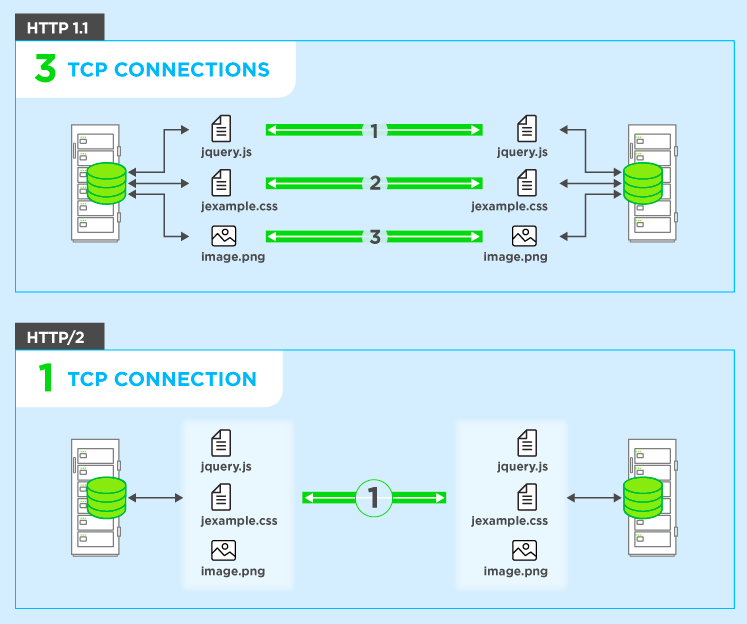

Imagine this scenario: you have a C# backend that uses an HTTP client supporting only HTTP/1.1. You want to upgrade to HTTP/2 to take advantage of multiplexing, but the client library's maintainer decides to abandon the project instead of adding HTTP/2 support.

In this case, you have three options:

- Ditch the idea.

- Change the client library to something that supports your use case.

- Add the functionality yourself.

If you choose option 3, you run into the other problem with client libraries.

Maintenance

Client libraries are just hard to maintain.

There is so much to do and, worse, you must support them in many different languages.

Just to be clear, I'm not only talking about HTTP but about any communication protocol.

WebSockets need their own client libraries, and someone has to maintain them.

This might not be a big problem now, because most client libraries are supported by tech giants such as Google, Mozilla, or Meta (Facebook).

But this was a very relevant problem in the past.

To solve this problem, gRPC came into the picture.

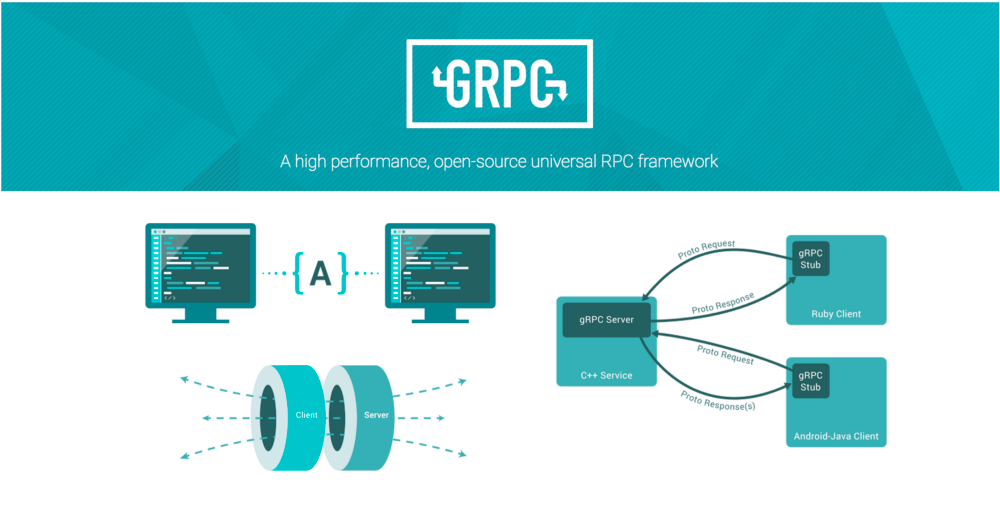

Google's Remote Procedure Calls (gRPC)

The main problem gRPC solves is that whenever we created a new protocol, we had to develop and maintain a client library for every language we wanted to support.

gRPC solves this by standardizing the whole stack, which it does in three ways.

It uses one client library

Google creates and maintains the client libraries for the popular programming languages.

Beyond those officially supported languages, the community maintains implementations for many others.

The official list of supported languages is published on the gRPC website (grpc.io).

It uses HTTP 2

gRPC uses HTTP/2 under the hood. This allows lower-latency communication, because many requests can share a single connection from client to server, which makes more efficient use of connections and of server resources.

HTTP/2 also supports bidirectional, asynchronous streaming over that connection, so the server can efficiently send messages to the client (asynchronous responses, notifications, etc.).

Keep in mind that the transport is an implementation detail hidden behind the gRPC API, so in principle it could be swapped for another protocol in the future without changes to your application code.
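To make the HTTP/2 part a little more concrete: on each HTTP/2 stream, gRPC sends every message as a length-prefixed frame. The frame starts with a 1-byte compressed flag and a 4-byte big-endian message length, followed by the message bytes (the "Length-Prefixed-Message" format in the gRPC over HTTP/2 protocol description). The sketch below frames and unframes payloads with Node's built-in `Buffer`. The helper names `frameMessage` and `readFrames` are hypothetical, and the gRPC libraries do all of this for you.

```javascript
// Hypothetical helpers illustrating gRPC's length-prefixed message framing.
// Frame layout: 1-byte compressed flag, 4-byte big-endian length, then the payload.
function frameMessage(payload, compressed = false) {
    const header = Buffer.alloc(5);
    header.writeUInt8(compressed ? 1 : 0, 0);   // compressed flag
    header.writeUInt32BE(payload.length, 1);    // payload length in bytes
    return Buffer.concat([header, payload]);
}

// Split a buffer holding one or more frames back into payloads.
function readFrames(buffer) {
    const messages = [];
    let offset = 0;
    while (offset + 5 <= buffer.length) {
        const compressed = buffer.readUInt8(offset) === 1;
        const length = buffer.readUInt32BE(offset + 1);
        const start = offset + 5;
        if (start + length > buffer.length) break;  // incomplete frame: wait for more data
        messages.push({ compressed, payload: buffer.subarray(start, start + length) });
        offset = start + length;
    }
    return messages;
}

// Two messages sent back to back on the same stream
const wire = Buffer.concat([
    frameMessage(Buffer.from("hi")),
    frameMessage(Buffer.from("there")),
]);
console.log(wire.toString("hex"));  // 0000000002686900000000057468657265
console.log(readFrames(wire).map(m => m.payload.toString()));  // [ 'hi', 'there' ]
```

Each frame carries its own length. As a result, several messages (for example, the items of a server stream) can be sent back to back on one stream and split apart again on the other side.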

Language Agnostic

Unlike REST, where the payload is usually JSON, gRPC uses Protocol Buffers (Protobufs) by default.

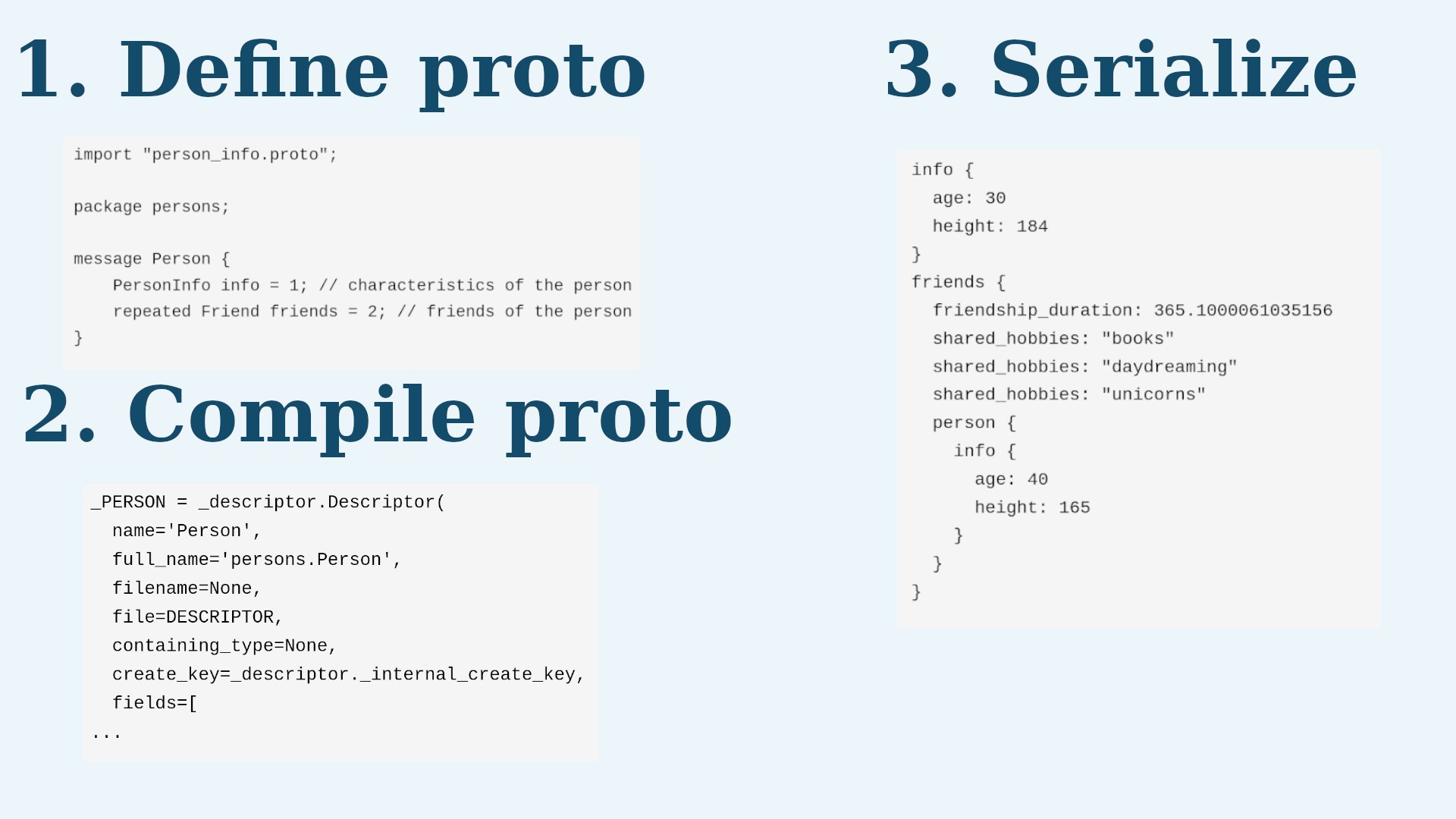

Protobuf is a language-agnostic data format used to serialize structured data. It was created at Google. The basic workflow is:

- Define your data in a `.proto` file.

- Compile that file to whatever language you need (Python, JavaScript, etc.).

- Use the generated code to serialize your data into a compact binary Protobuf message (and to parse it back).
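The sketch below illustrates what "binary format" means here. It implements the base-128 varint encoding that Protobuf uses for integer fields. Each byte carries 7 bits of the number, lowest bits first, and the high bit says whether more bytes follow. The function names are hypothetical. The sketch only handles non-negative integers up to `Number.MAX_SAFE_INTEGER`. In real projects, the generated code or a Protobuf library takes care of this.

```javascript
// Hypothetical helpers: Protobuf-style base-128 varints for non-negative integers.
function encodeVarint(value) {
    const bytes = [];
    do {
        let byte = value % 128;            // low 7 bits
        value = Math.floor(value / 128);   // shift right by 7 (safe beyond 32 bits)
        if (value > 0) byte |= 0x80;       // continuation bit: more bytes follow
        bytes.push(byte);
    } while (value > 0);
    return bytes;
}

function decodeVarint(bytes, offset = 0) {
    let value = 0;
    let multiplier = 1;
    let pos = offset;
    while (true) {
        const byte = bytes[pos++];
        value += (byte & 0x7f) * multiplier;
        if ((byte & 0x80) === 0) break;    // last byte of this varint
        multiplier *= 128;
    }
    return { value, length: pos - offset };
}

console.log(encodeVarint(1));                   // [ 1 ]
console.log(encodeVarint(150));                 // [ 150, 1 ]  (0x96 0x01)
console.log(decodeVarint([0x96, 0x01]).value);  // 150
```

Small numbers are common in practice and take a single byte, whereas JSON spends one character per decimal digit.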

Protobufs vs JSON

You might ask, "Well why does gRPC use Protobufs over JSON?".

Well, they are made for two different purposes and gRPC is more suited toward Protocol Buffers.

Let's start with JSON, which is a message format that started from a subset of JavaScript. On the other hand, Protobufs are a more than a message format, it is also a set of rules and tools to define and exchange these messages.

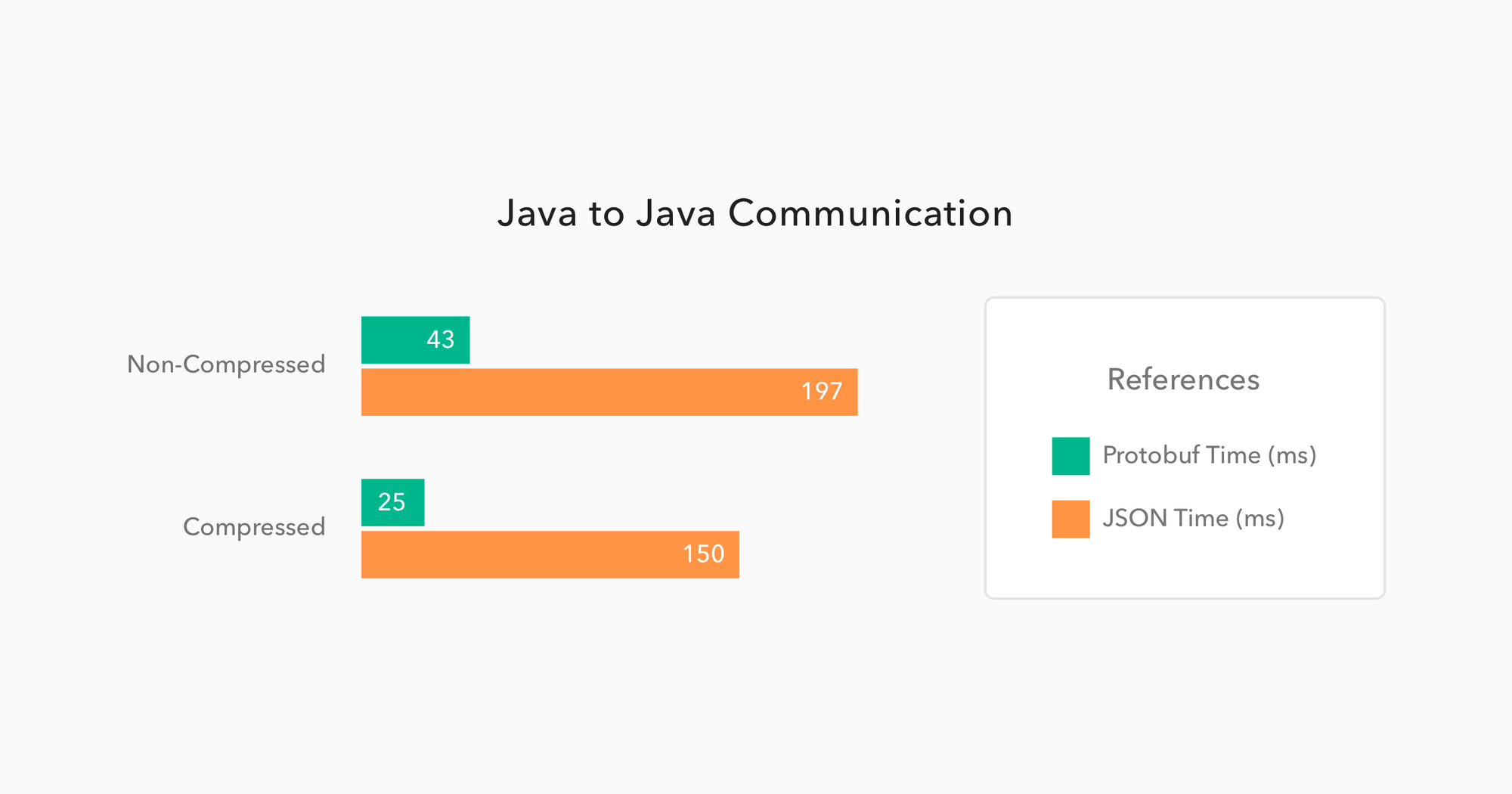

So here comes the main question, "Are Protobufs really faster than JSON?"

The answer is yes because protocol buffers are just more compact, everything is in binary format. That's why Google decided to use them for gRPC.
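As a rough illustration of the size difference, the sketch below hand-encodes a `TodoItem` in the Protobuf wire format and compares it with the equivalent JSON. `TodoItem` is the message from the to-do example later in this article: `int32 id = 1; string text = 2;`. The encoder is a simplified, hypothetical helper (`encodeTodoItem`) written only for this comparison. It supports just single-byte varints, and unlike a real proto3 encoder it always writes both fields, even when they hold default values. One small message is not a benchmark.

```javascript
// Hypothetical, simplified encoder for: message TodoItem { int32 id = 1; string text = 2; }
// Always writes both fields and only supports single-byte varints
// (0 <= id <= 127, text of at most 127 UTF-8 bytes).
function encodeTodoItem({ id, text }) {
    const textBytes = Buffer.from(text, "utf8");
    if (id < 0 || id > 127 || textBytes.length > 127) {
        throw new RangeError("this sketch only supports single-byte varints");
    }
    // A field's tag is (field number << 3) | wire type
    const tag = (fieldNumber, wireType) => (fieldNumber << 3) | wireType;
    return Buffer.concat([
        Buffer.from([tag(1, 0), id]),                // field 1, wire type 0 (varint)
        Buffer.from([tag(2, 2), textBytes.length]),  // field 2, wire type 2 (length-delimited)
        textBytes,                                   // the string's UTF-8 bytes
    ]);
}

const item = { id: 1, text: "exercise" };
const asProtobuf = encodeTodoItem(item);
const asJson = Buffer.from(JSON.stringify(item), "utf8");

console.log(asProtobuf.toString("hex"));        // 080112086578657263697365
console.log(asProtobuf.length, asJson.length);  // 12 26
```

The names "id" and "text" never appear in the binary output. Only the field numbers 1 and 2 do, and that accounts for much of the saving.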

gRPC Modes

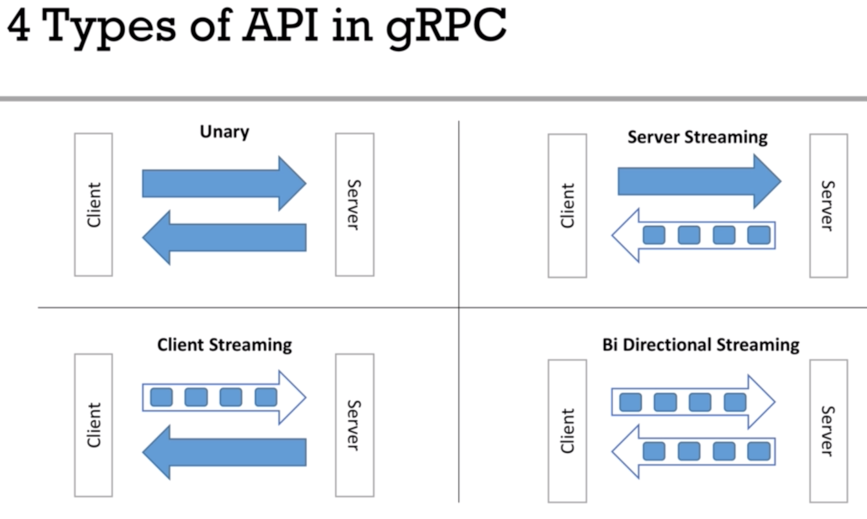

As gRPC is an alternative to typical client/server communication, it has to cover what HTTP, WebSockets, SSE, and other protocols do.

It does that with four distinct modes:

- Unary RPC – The typical request-response cycle: the client sends a single request, waits, and receives a single response from the server.

- Server Streaming RPC – The client sends a single request but gets back a stream of responses. For example, on a video streaming platform you make a single request and receive the video as a stream of chunks. On the client side, an event handler (in Node.js, `call.on("data", ...)`) is triggered whenever new data arrives from the server.

- Client Streaming RPC – The opposite of server streaming: the client sends a stream of messages and the server replies once. This is useful for uploading a huge file. Instead of sending it all in one request, you split it into chunks.

- Bidirectional Streaming RPC – The client and the server both send streams of messages to each other, independently. This is similar in spirit to WebSockets and is used in online games, chat applications, and more.
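In Node.js, the four modes show up as different handler shapes on the server. The sketch below assumes the handler conventions of the Node gRPC libraries:

- Unary and client streaming calls use `call.request` and a `callback`.
- Handlers whose responses stream use `call.write()` and `call.end()`.
- Handlers whose requests stream listen for `"data"` and `"end"` events.

To keep the sketch runnable without a server, it drives the handlers with fake `call` objects built from `EventEmitter`. Those fakes and the `echo*` handler names are hypothetical.

```javascript
const { EventEmitter } = require("events");

// Unary: one request in, one response out through the callback.
function echoUnary(call, callback) {
    callback(null, { text: call.request.text.toUpperCase() });
}

// Server streaming: one request in, several responses written to the call.
function echoServerStream(call) {
    for (const word of call.request.text.split(" ")) {
        call.write({ text: word });
    }
    call.end();
}

// Client streaming: requests arrive as "data" events, one response on "end".
function echoClientStream(call, callback) {
    const words = [];
    call.on("data", msg => words.push(msg.text));
    call.on("end", () => callback(null, { text: words.join(" ") }));
}

// Bidirectional streaming: answer each incoming message as soon as it arrives.
function echoBidi(call) {
    call.on("data", msg => call.write({ text: String(msg.text.length) }));
    call.on("end", () => call.end());
}

// Hypothetical fake call object: records what the handler writes and
// lets us emit incoming messages by hand.
function fakeCall(request) {
    const call = new EventEmitter();
    call.request = request;
    call.written = [];
    call.ended = false;
    call.write = msg => call.written.push(msg.text);
    call.end = () => { call.ended = true; };
    return call;
}

const results = {};

echoUnary(fakeCall({ text: "hi" }), (err, res) => { results.unary = res.text; });

const serverCall = fakeCall({ text: "a b c" });
echoServerStream(serverCall);
results.serverStream = serverCall.written;

const clientCall = fakeCall();
echoClientStream(clientCall, (err, res) => { results.clientStream = res.text; });
clientCall.emit("data", { text: "x" });
clientCall.emit("data", { text: "y" });
clientCall.emit("end");

const bidiCall = fakeCall();
echoBidi(bidiCall);
bidiCall.emit("data", { text: "one" });
bidiCall.emit("data", { text: "three" });
bidiCall.emit("end");
results.bidi = bidiCall.written;

console.log(JSON.stringify(results));
// {"unary":"HI","serverStream":["a","b","c"],"clientStream":"x y","bidi":["3","5"]}
```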

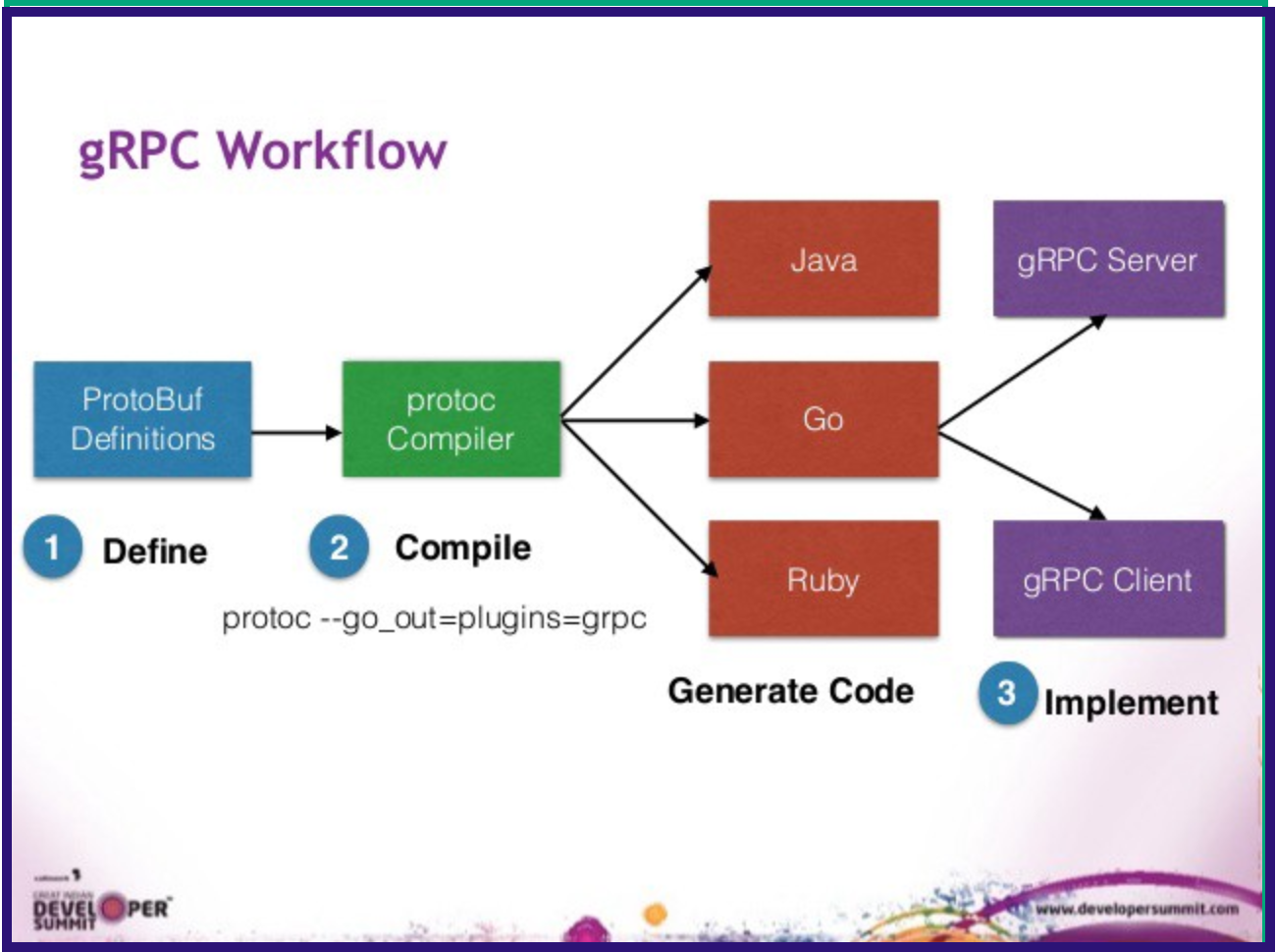

gRPC Workflow

Before we move to the example, I want to explain the typical four-step gRPC workflow.

- Define the service contract for communication – Services are defined by their methods' parameters and return types. For example, I can define a service method called `foo` that accepts `bar` and returns `foobar`.

- Generate the gRPC code from the `.proto` file – Special compilers generate code from `.proto` files, with the appropriate classes for the desired target language (such as C++ or Java).

- Implement the server in the chosen language.

- Create the client stub that calls the service – The client calls methods on the stub as if they were local functions. The stub sends the requests to the server and returns its responses.

Now that we know the workflow, let's see them in action in the example section next.

Todo List Example

Yes, one more to-do list example.

The spin with this one is that we will use gRPC to create and read our todos, using both Unary mode and Server Streaming mode.

So to break things down, we will have two applications, client and server. The client will call the different methods that the server provides.

PS. The language of both the server and the client will be JavaScript. So if you hate JavaScript, you are out of luck.

Prerequisites

- Node.js.

- Yarn or npm.

- Basic knowledge of JavaScript.

Initializing Project

First of all, let's create a directory for our project and initialize yarn.

```shell
mkdir javascript-grpc-demo
cd javascript-grpc-demo
yarn init
```

For this project we need two packages, so let's install them.

```shell
yarn add grpc @grpc/proto-loader
```

Building our Proto Schema

To begin, let's define the communication between our client and server.

To do that, let's create a todo.proto file.

```shell
touch todo.proto
```

A protobuf file has two main components:

- Services – This is our RPC interface, where we define the methods we provide to our clients. In our case, these are `createTodo`, `readTodos`, and `readTodosStream`. We also define the argument each method accepts and the response it returns.

- Messages – Messages are our abstract data structures. In our case, we will have three messages: `voidNoParam`, `TodoItem`, and `TodoItems`.

Here's the code of our todo.proto file.

```protobuf
syntax = "proto3";

package todoPackage;

service Todo {
    rpc createTodo(TodoItem) returns (TodoItem);
    rpc readTodos(voidNoParam) returns (TodoItems);
    rpc readTodosStream(voidNoParam) returns (stream TodoItem);
}

// Empty message for RPCs that take no arguments
message voidNoParam {}

message TodoItem {
    int32 id = 1;
    string text = 2;
}

message TodoItems {
    repeated TodoItem items = 1;
}
```

Building our Server

Next, let's build the actual functionality.

So first of all, let's create a server.js file.

```shell
touch server.js
```

For now, just copy this piece of code into server.js.

```javascript
const grpc = require("grpc")
const protoLoader = require("@grpc/proto-loader")

// Load todo.proto and turn it into a gRPC package object
const packageDef = protoLoader.loadSync("todo.proto", {})
const grpcObject = grpc.loadPackageDefinition(packageDef)
const todoPackage = grpcObject.todoPackage

// Listen on port 40000 on all network interfaces, without TLS
const server = new grpc.Server()
server.bind("0.0.0.0:40000", grpc.ServerCredentials.createInsecure())

// Map each RPC of the Todo service to a JavaScript function
server.addService(todoPackage.Todo.service, {
    "createTodo": createTodo,
    "readTodos": readTodos,
    "readTodosStream": readTodosStream
})
server.start()

// In-memory storage: the list is lost when the server restarts
const todos = []

// Unary RPC: store the new item and send it back to the client
function createTodo(call, callback) {
    const todoItem = {
        "id": todos.length + 1,
        "text": call.request.text
    }
    todos.push(todoItem)
    callback(null, todoItem)
}

// Server streaming RPC: write every item to the stream, then close it
function readTodosStream(call) {
    todos.forEach(t => call.write(t))
    call.end()
}

// Unary RPC: return all items in a single TodoItems message
function readTodos(call, callback) {
    callback(null, { "items": todos })
}
```

Let's break this file down:

- First we load our dependencies and the proto file, create a gRPC object from the package definition, and fetch `todoPackage`.

- Next we create a gRPC server and bind it to port 40000 on all network interfaces (`0.0.0.0`). The gRPC API expects a credentials object for every server. `grpc.ServerCredentials.createInsecure()` gives us an unencrypted connection, which is fine for local development but not for production.

- Then we tell the server that it implements the `Todo` service defined in our Protobuf file. We do that with the `addService` method, mapping each RPC method to a JavaScript function.

- Finally, we define the handlers that actually run. Unary handlers take two parameters. `call` carries the incoming request message (`call.request`), and `callback` is how you send the response (or an error) back to the client. In RESTful terms, you can think of these as the request and the response. The streaming handler `readTodosStream` only needs `call`: it writes each item with `call.write()` and closes the stream with `call.end()`.

Building the Client

Now that our server is ready, let's build the client.

As usual, let's create a file called client.js.

```shell
touch client.js
```

Add this code to client.js.

```javascript
const grpc = require("grpc")
const protoLoader = require("@grpc/proto-loader")

const packageDef = protoLoader.loadSync("todo.proto", {})
const grpcObject = grpc.loadPackageDefinition(packageDef)
const todoPackage = grpcObject.todoPackage

// The to-do text comes from the first command-line argument
const text = process.argv[2]

// Client stub for the Todo service; unencrypted to match the server
const client = new todoPackage.Todo("localhost:40000",
    grpc.credentials.createInsecure())

// Unary call: create a to-do item (the server assigns the real id)
client.createTodo({
    "id": -1,
    "text": text
}, (err, response) => {
    if (err) return console.error(err)
    console.log("Received from server " + JSON.stringify(response))

    /*
    // Unary alternative: fetch every to-do item in one response
    client.readTodos({}, (err, response) => {
        if (err) return console.error(err)
        console.log("read the todos from server " + JSON.stringify(response))
        if (response.items)
            response.items.forEach(a => console.log(a.text))
    })
    */

    // Server streaming call, started only after the item has been created
    const call = client.readTodosStream({})
    call.on("data", item => {
        console.log("received item from server " + JSON.stringify(item))
    })
    call.on("end", () => console.log("server done!"))
})
```

Let's break down this file:

- As with server.js, we start with the same imports and grab our `todoPackage`.

- We take the to-do text from the command-line arguments and use `todoPackage` to create a client stub connected to our server.

- We call `createTodo` to create a to-do item and log the response (or the error, if the call failed).

- The commented-out block calls `readTodos` to fetch all the todos at once and log them. It's commented out because we use `readTodosStream` instead.

- Once the item has been created, we open a `readTodosStream` call and register an event handler that logs each item as it arrives. When the server has nothing left to send, it ends the stream and we log "server done!". Starting the stream inside the `createTodo` callback guarantees that the new item is already stored on the server.

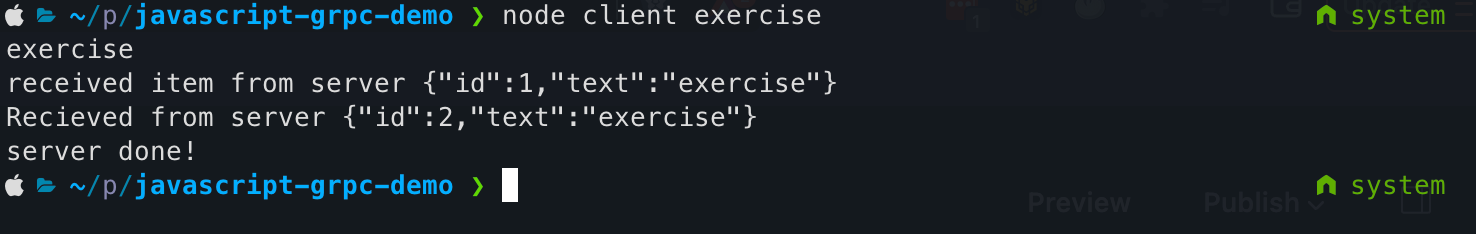

Testing Things Out

Now that all our components are ready, make sure that your server is running.

```shell
node server.js
```

Client in Action

Let's try creating a to-do item called "exercise".

```shell
node client.js exercise
```

If it succeeded, the client first logs the server's response to `createTodo`. It then logs each streamed to-do item, and finally "server done!".

Congratulations, we got something basic working.

I know it's simple, but I hope you learned the main concepts of gRPC so you can build more complex stuff later on.

gRPC Use Cases

You would want to use gRPC if you have any of the following requirements.

- Real-time communication services where you deal with streaming calls

- When efficient communication is a goal

- In multi-language environments

- For internal APIs where you don’t have to force technology choices on clients

- For new builds; converting an existing API to gRPC might not be worth the effort

Example use cases include:

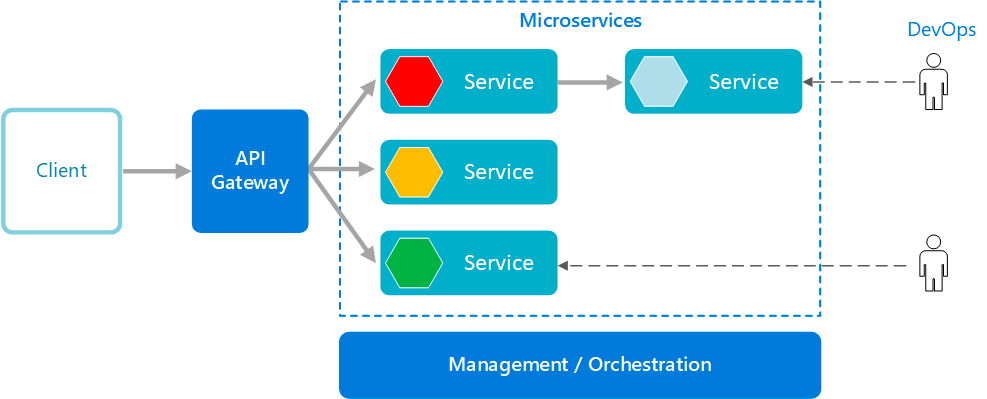

- Microservices – gRPC is designed for low latency and high throughput communication. gRPC is great for lightweight microservices where efficiency is critical.

- Point-to-point real-time communication – gRPC has excellent support for bi-directional streaming. gRPC services can push messages in real-time without polling.

- Polyglot environments – gRPC tooling supports all popular development languages, making gRPC a good choice for multi-language environments.

- Network constrained environments – gRPC messages are serialized with Protobuf, a lightweight message format. A gRPC message is typically smaller than an equivalent JSON message.

- Inter-process communication (IPC) – IPC transports such as Unix domain sockets and named pipes can be used with gRPC to communicate between apps on the same machine.
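How much smaller a Protobuf message is depends on the data, and there are exceptions. A well-known one involves negative numbers. A negative `int32` is sign-extended to 64 bits and encoded as a 10-byte varint. Protobuf's `sint32` type instead uses ZigZag encoding and stores small negative numbers in a single byte. The sketch below compares a single field holding -1 in both encodings and in JSON. The helper names and the JSON field name `a` are hypothetical, and BigInt handles the 64-bit sign extension.

```javascript
// Hypothetical helpers comparing encodings of a single field holding -1.

// Base-128 varint of a non-negative BigInt.
function varintBytes(value) {
    const bytes = [];
    do {
        let byte = Number(value & 0x7fn);  // low 7 bits
        value >>= 7n;
        if (value > 0n) byte |= 0x80;      // continuation bit
        bytes.push(byte);
    } while (value > 0n);
    return bytes;
}

// int32: negative values are sign-extended to 64 bits, so -1 becomes 2^64 - 1.
const int32Bytes = n => varintBytes(BigInt.asUintN(64, BigInt(n)));

// sint32: ZigZag maps 0, -1, 1, -2, ... to 0, 1, 2, 3, ... before varint encoding.
const sint32Bytes = n => varintBytes(BigInt((n << 1) ^ (n >> 31)) & 0xffffffffn);

const tagBytes = 1;  // field 1 with wire type 0 has a one-byte tag
console.log("int32 :", tagBytes + int32Bytes(-1).length, "bytes");   // int32 : 11 bytes
console.log("sint32:", tagBytes + sint32Bytes(-1).length, "bytes");  // sint32: 2 bytes
console.log("JSON  :", JSON.stringify({ a: -1 }).length, "bytes");   // JSON  : 8 bytes
```

So for fields that are often negative, the `.proto` type you pick matters as much as the choice of Protobuf over JSON.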

gRPC Strengths and Weaknesses

Strengths of gRPC

Performance

gRPC uses HTTP/2, which supports multiplexing: you can send multiple concurrent requests over one TCP connection. It also uses Protobuf, which is much more compact than JSON and XML. The combination of HTTP/2 and Protobuf is what makes gRPC fast.

Streaming

HTTP/2 provides the ability to have real-time communication streams. gRPC takes advantage of that and provides first-class support for streaming.

gRPC supports multiple streaming semantics. As mentioned above, the three streaming modes are:

- Client Streaming

- Server Streaming

- Bidirectional Streaming

One Client Library

This is essentially why gRPC was created: to replace protocols whose client libraries were fragmented across languages. Most popular programming languages have a very similar gRPC library maintained by Google itself.

Code generation reinforces this. A `.proto` file defines the contract of the gRPC services and messages. From that file, gRPC tooling generates a service base class, message classes, and a complete client.

Strict Specifications

REST APIs don't have a formal specification. Everyone has their own set of best practices, and they sometimes conflict with each other.

On the other hand, gRPC has a formal specification. It eliminates debate and saves developers time, because gRPC behaves consistently across platforms and implementations.

Deadlines/Timeouts and Cancellation

HTTP/2 lets the client cancel an individual request. Each request travels on its own stream with a stream ID, and gRPC cancels a call by resetting that stream (an RST_STREAM frame) without closing the whole connection.

You can go one step further and configure how long a client is willing to wait for an RPC to complete. The deadline is sent to the server with the request, and the server can decide what to do once it is exceeded. For example, the server might cancel in-progress database requests on timeout.

Together, cancellation and deadlines help enforce resource usage limits.
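On the wire, a client's deadline travels to the server as a `grpc-timeout` request header, as described in the gRPC over HTTP/2 protocol description. The value is a positive integer of at most 8 digits followed by a unit: `H`, `M`, `S`, `m`, `u`, or `n` for hours, minutes, seconds, milliseconds, microseconds, and nanoseconds. The sketch below converts a deadline into such a header value. `toGrpcTimeout` is a hypothetical helper; real clients compute this for you from a deadline call option. Rounding up to the chosen unit is a choice made in this sketch so the server is never given less time than the client intended.

```javascript
// Hypothetical helper: turn an absolute deadline (ms since epoch) into a grpc-timeout value.
// The value must be a positive integer of at most 8 digits, so we use the finest
// unit that fits, rounding up rather than down.
function toGrpcTimeout(deadlineMs, nowMs = Date.now()) {
    const remainingMs = deadlineMs - nowMs;
    if (remainingMs <= 0) {
        throw new RangeError("deadline already passed");
    }
    // Date-based deadlines have millisecond resolution, so "u" and "n" aren't needed here.
    const units = [
        ["m", 1],                 // milliseconds
        ["S", 1000],              // seconds
        ["M", 60 * 1000],         // minutes
        ["H", 60 * 60 * 1000],    // hours
    ];
    for (const [unit, msPerUnit] of units) {
        const value = Math.ceil(remainingMs / msPerUnit);
        if (value <= 99999999) return `${value}${unit}`;
    }
    throw new RangeError("deadline too far in the future");
}

const now = Date.now();
console.log(toGrpcTimeout(now + 1500, now));       // 1500m
console.log(toGrpcTimeout(now + 200000000, now));  // 200000S
```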

Security

This is less a benefit of gRPC itself than of running HTTP/2 over TLS, which encrypts traffic between client and server.

Weaknesses of gRPC

Browser Support

Since gRPC relies heavily on HTTP/2, it's currently impossible to call a gRPC service directly from a browser. No browser gives web applications the low-level control over HTTP/2 requests that a gRPC client needs.

To make it work in a browser, you need a proxy layer that translates between browser-friendly HTTP requests and gRPC over HTTP/2. This is the approach gRPC-Web takes.

Not Human Readable

This is more of a weakness of Protobufs: because messages are in a binary format, they aren't human readable.

But there are tools that help, such as the gRPC command-line tool (CLI). Protobuf messages also support conversion to and from JSON, which gives you an efficient way to turn them into human-readable form when debugging.
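Even without the `.proto` file, the binary format can be inspected, because every field starts with a tag holding its field number and wire type. The sketch below walks a buffer and lists field numbers and values, similar in spirit to `protoc --decode_raw`. It is a simplified, hypothetical helper (`decodeRaw`) with several limits:

- It only handles varint and length-delimited fields.
- It shows length-delimited values as UTF-8 text.
- It doesn't attempt to decode nested messages.

```javascript
// Hypothetical helper: list [field number, value] pairs from Protobuf bytes without a schema.
// Supports wire type 0 (varint) and wire type 2 (length-delimited) only.
function decodeRaw(bytes) {
    const fields = [];
    let pos = 0;

    const readVarint = () => {
        let value = 0;
        let multiplier = 1;
        let byte;
        do {
            byte = bytes[pos++];
            value += (byte & 0x7f) * multiplier;
            multiplier *= 128;
        } while (byte & 0x80);
        return value;
    };

    while (pos < bytes.length) {
        const tag = readVarint();
        const fieldNumber = Math.floor(tag / 8);  // tag >> 3
        const wireType = tag % 8;                 // tag & 7
        if (wireType === 0) {
            fields.push([fieldNumber, readVarint()]);
        } else if (wireType === 2) {
            const length = readVarint();
            fields.push([fieldNumber, Buffer.from(bytes.subarray(pos, pos + length)).toString("utf8")]);
            pos += length;
        } else {
            throw new Error(`wire type ${wireType} is not handled in this sketch`);
        }
    }
    return fields;
}

// The bytes of TodoItem { id: 1, text: "exercise" }
const todoBytes = Buffer.from("080112086578657263697365", "hex");
console.log(decodeRaw(todoBytes));  // [ [ 1, 1 ], [ 2, 'exercise' ] ]
```

Without the schema you only see field numbers, not names. That is why shipping or sharing the `.proto` file matters for debugging.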

Limited Caching Support

HTTP normally supports intermediaries (proxies, CDNs) that cache responses at the edge. gRPC calls, however, use the POST method, and intermediaries don't cache POST responses.

Moreover, the gRPC specification makes no real provision for cache semantics between server and client.

Learning Curve

gRPC is very different from traditional APIs, so it takes time to get used to. Many teams find it challenging to get familiar with Protobuf and with the tools needed to deal with the limitations of HTTP/2.

This is why many teams prefer to rely on REST for as long as possible.

Maturity

This is quite debatable but is brought up by many people. A lot of people think that it's too early to heavily rely on gRPC because it's still a "new" technology. I don't want to comment much on this because it's very opinionated.

Recommended Resources

If you're excited and can't wait to start learning gRPC, let me share a basic starter pack with you.

Official Guides

Community

Tools

Courses

If you want more cool resources on gRPC, feel free to check out this git repository.

Conclusion

That's it folks, I hope you learned a lot. I just want to end it by saying that gRPC is just a tool, so it's not applicable to all projects. I don't want you guys using it because it's a shiny new thing.

Please, before picking a technology, think if it's beneficial for your use case.

Today we learned:

- Core concepts of gRPC and why it came to exist.

- Core concepts of Protobufs and why gRPC uses them.

- Basic code example that uses gRPC.

- Use cases to use and not use gRPC and alternatives.

- Strengths and weaknesses of gRPC.

If you have the extra time, I'm curious: how many of you actually use RPC in your day job?

If so, can you leave your experience in the comments below or on Twitter @tamerlan_dev.

Thanks for reading.
