Load Balancing 101: How It Works and Why It Matters for Your Platform

At the start of this year, I felt like my life was out of control.

Things were moving, but it all felt like a bit too much.

I had no balance.

I was trying to focus on 100 different things at once.

It was only when I narrowed my focus to a few core things in my life that I got everything back in order.

Computer systems are somewhat similar: if you put them under too much stress, they degrade.

But stress is bound to happen when you have a lot of users.

So what's the solution?

The answer is load balancing.

What Is Load Balancing?

Load balancing is the process of distributing network traffic across a set of resources, such as servers, to improve the performance and efficiency of an application.

For example, let's say we have an application that provides video hosting services.

It runs on a single server located in the US.

The application became popular globally and started to get traffic from all over the world.

Unfortunately, our application started to degrade: requests took longer to process, and users complained.

One way to implement load balancing is to run two servers for the application, one in Europe and another in the US.

This improves application performance because European users are routed to the European server, while American users are routed to the US server.

In a nutshell, we divide the network traffic between two servers so that each one handles less load, which makes our application faster and our users happier.

Benefits of Load Balancing

Apart from the obvious performance benefits, let's look at some other benefits of load balancing:

- Scalability – Load balancing allows you to scale horizontally: instead of buying more powerful servers, you add more servers.

- High availability – As mentioned above, load balancing allows us to scale horizontally, which means we have multiple servers. If one server fails, the load balancer detects it and redirects traffic to the remaining healthy servers.

- Better resource utilization – Load balancing helps to optimize resource utilization by distributing traffic evenly across multiple servers or resources. This ensures that each server or resource is used efficiently, helping to reduce costs and maximize performance.
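The high-availability point relies on the load balancer noticing when a server has failed, which is usually done with periodic health checks. Below is a minimal sketch of that bookkeeping. It assumes a hypothetical `probe` callable that returns `True` when a server responds (in practice this might be an HTTP request to a health endpoint) and an assumed threshold of three consecutive failures before a server is taken out of rotation; both are illustrative choices, not standard values.

```python
from typing import Callable, Dict, List


class HealthChecker:
    """Tracks consecutive probe failures and reports which servers are healthy."""

    def __init__(self, servers: List[str], probe: Callable[[str], bool],
                 max_failures: int = 3) -> None:
        self.servers = servers
        self.probe = probe  # hypothetical: returns True if the server answered
        self.max_failures = max_failures  # assumed threshold, tune per system
        self.failures: Dict[str, int] = {s: 0 for s in servers}

    def run_checks(self) -> None:
        # Probe every server once; a single success resets its failure count.
        for server in self.servers:
            if self.probe(server):
                self.failures[server] = 0
            else:
                self.failures[server] += 1

    def healthy(self) -> List[str]:
        # Only servers below the failure threshold should receive traffic.
        return [s for s in self.servers if self.failures[s] < self.max_failures]


# Usage: server "b" stops responding, so after three failed rounds it is removed.
down = {"b"}
checker = HealthChecker(["a", "b", "c"], probe=lambda s: s not in down)
for _ in range(3):
    checker.run_checks()
print(checker.healthy())  # ['a', 'c']
```

Once "b" starts answering again, the next successful check resets its counter and it rejoins the pool.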

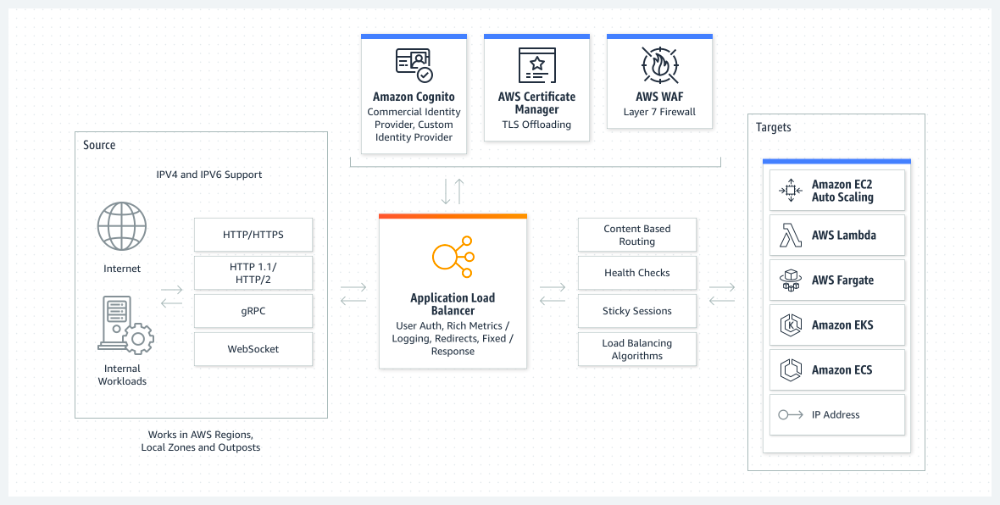

Types of Load Balancing

Load balancers route network traffic, so we can classify them based on what information they inspect when deciding where to send it:

- Network Load Balancing – Works at the transport layer (Layer 4) and checks IP addresses, ports, and other network information to route traffic.

- Application Load Balancing – Works at the application layer (Layer 7) and checks the content of the client's request, such as HTTP headers, URL paths, cookies, or SSL session IDs.

- Global Server Load Balancing – Checks the geolocation of the request and routes traffic to the closest server.

- DNS Load Balancing – You configure your Domain Name System (DNS) so that requests for your domain are spread across a pool of servers, for example by returning different server IP addresses for the same domain name.
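To make the "closest server" idea from global server load balancing concrete, here is a minimal sketch of proximity-based routing using great-circle (haversine) distance. The data center names and coordinates are hypothetical, and it assumes the client's location is already known; real GSLB products usually infer location from the client's IP address or DNS resolver and may also weigh server health and load.

```python
import math
from typing import Dict, Tuple

# Hypothetical data centers with (latitude, longitude) in degrees.
DATA_CENTERS: Dict[str, Tuple[float, float]] = {
    "us-server": (38.9, -77.0),  # near Washington, D.C.
    "eu-server": (50.1, 8.7),    # near Frankfurt
}


def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def closest_server(client: Tuple[float, float]) -> str:
    # Route the client to the data center with the smallest distance.
    return min(DATA_CENTERS, key=lambda dc: haversine_km(client, DATA_CENTERS[dc]))


print(closest_server((48.9, 2.4)))    # eu-server (client near Paris)
print(closest_server((40.7, -74.0)))  # us-server (client near New York)
```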

Load Balancing Algorithms

The load balancing algorithm is the logic the load balancer uses to decide which server gets the next client request.

There are two primary approaches to load balancing:

- Dynamic Load Balancing Algorithms – Take into account the state of each server and distribute traffic accordingly.

- Static Load Balancing Algorithms – Do not take the state of the servers into account; traffic is distributed according to a fixed rule, such as a rotation or a hash.

Now that we know the types of load-balancing algorithms, let's take a look at specific algorithms for each type.

Dynamic Load Balancing Algorithms

Least Connection

The least connection algorithm checks which server has the fewest open connections and sends the next request to that server.

This algorithm does not consider how long each connection lasts and assumes that all requests require roughly equal processing power.
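A minimal sketch of the selection step, assuming the load balancer already keeps a count of open connections per server (the server names and counts are illustrative). A real balancer would also decrement the count when a connection closes.

```python
from typing import Dict


def least_connection(active: Dict[str, int]) -> str:
    """Return the server with the fewest open connections.

    Ties go to whichever server appears first in the dict.
    """
    return min(active, key=active.get)


# Usage: illustrative open-connection counts per server.
active = {"server-1": 12, "server-2": 4, "server-3": 9}
target = least_connection(active)
active[target] += 1  # the new request opens a connection on the chosen server
print(target)  # server-2
```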

Weighted Least Connection

The weighted least connection algorithm builds on the least connection algorithm but assumes that some servers can handle more active connections than others.

Therefore, you can assign a weight (capacity) to each server.

The load balancer then sends each new request to the server with the fewest active connections relative to its capacity. For example, if two servers have the same number of connections, the one with the higher weight is chosen.
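One common way to combine connection counts with weights is to compare the ratio of active connections to weight; this sketch assumes that rule, and the server names and numbers are made up. Note that it also covers the tie case: with equal connection counts, the higher weight gives the lower ratio.

```python
from typing import Dict


def weighted_least_connection(active: Dict[str, int],
                              weights: Dict[str, int]) -> str:
    """Pick the server with the lowest active-connections-to-weight ratio."""
    return min(active, key=lambda s: active[s] / weights[s])


active = {"big": 4, "small": 3}
weights = {"big": 2, "small": 1}  # "big" is assumed to have twice the capacity
print(weighted_least_connection(active, weights))  # big (4/2 = 2 beats 3/1 = 3)
```

Even though "big" has more open connections, it is relatively less loaded, so it gets the next request.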

Least Response Time

This algorithm takes into account both the number of open connections on each server and its average response time.

The load balancer forwards each new request to the server with the best combination of few active connections and a short average response time.
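How the two signals are combined varies between products. This sketch assumes a simple score of (active connections + 1) × average response time and picks the lowest score; the formula and the numbers are illustrative assumptions, not a specific product's behavior.

```python
from typing import Dict, Tuple


def least_response_time(stats: Dict[str, Tuple[int, float]]) -> str:
    """Pick a server using both open connections and average response time.

    stats maps server -> (active_connections, avg_response_ms).
    The score formula is an illustrative assumption; real products differ.
    """
    def score(server: str) -> float:
        connections, avg_ms = stats[server]
        # +1 counts the request we are about to send, and keeps an idle
        # server's response time from being multiplied away to zero.
        return (connections + 1) * avg_ms

    return min(stats, key=score)


stats = {
    "server-1": (2, 120.0),  # score 3 * 120 = 360
    "server-2": (5, 40.0),   # score 6 * 40 = 240
    "server-3": (1, 200.0),  # score 2 * 200 = 400
}
print(least_response_time(stats))  # server-2
```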

Resource Based

Resource-based load balancers rely on monitoring software, called agents, running on each server to measure how much CPU and memory is available.

The load balancer then forwards the request to the server with the most available resources.
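A minimal sketch of the decision, assuming each agent reports free CPU and memory as fractions between 0 and 1 (a hypothetical report format). It also assumes the balancer scores each server by its scarcer resource; other scoring rules are just as plausible.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AgentReport:
    """What a hypothetical per-server agent reports to the load balancer."""
    server: str
    free_cpu: float     # fraction of CPU currently idle, 0.0 to 1.0
    free_memory: float  # fraction of memory currently free, 0.0 to 1.0


def most_resources(reports: List[AgentReport]) -> str:
    # Score each server by its scarcest resource, so a server with idle CPU
    # but almost no free memory is not treated as lightly loaded.
    best = max(reports, key=lambda r: min(r.free_cpu, r.free_memory))
    return best.server


reports = [
    AgentReport("server-1", free_cpu=0.80, free_memory=0.10),  # score 0.10
    AgentReport("server-2", free_cpu=0.50, free_memory=0.60),  # score 0.50
    AgentReport("server-3", free_cpu=0.30, free_memory=0.90),  # score 0.30
]
print(most_resources(reports))  # server-2
```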

Static Load Balancing Algorithms

Round Robin

Round-robin load balancing is the simplest and one of the most commonly used load balancing algorithms.

Requests are distributed in a simple rotation. For example, if you have three servers, the first request goes to the first server, the second to the second server, the third to the third server, and the fourth back to the first server.

A simple form of this is DNS round robin, where the list of server IP addresses is kept in the Domain Name System (DNS) and handed out in rotating order.

Round robin works best when every server has similar processing capacity and requests need similar amounts of processing power.
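The rotation described above fits in a few lines of Python using `itertools.cycle`, which repeats a sequence forever. The server names are placeholders.

```python
from itertools import cycle
from typing import Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Yield servers in a fixed rotation, wrapping around at the end."""
    return cycle(servers)


rotation = round_robin(["server-1", "server-2", "server-3"])
picks = [next(rotation) for _ in range(4)]
print(picks)  # ['server-1', 'server-2', 'server-3', 'server-1']
```

Because it ignores server state entirely, this is a static algorithm: the fourth request goes back to the first server no matter how busy it is.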

Weighted Round Robin

Weighted round robin builds on the standard round-robin algorithm, but each server is assigned a weight based on its capabilities.

Servers with higher weights have more resources, so they receive proportionally more requests.
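A naive weighted round robin sends each server its whole share in one burst (a, a, a, a, a, b, c). The sketch below instead uses a variant often called smooth weighted round robin, which interleaves the picks while still giving each server exactly `weight` requests per `total weight` requests. The weights are illustrative assumptions.

```python
from typing import Dict


class SmoothWeightedRoundRobin:
    """Weighted rotation that interleaves picks instead of sending bursts."""

    def __init__(self, weights: Dict[str, int]) -> None:
        self.weights = weights
        self.total = sum(weights.values())
        self.current = {server: 0 for server in weights}

    def pick(self) -> str:
        # Every server earns its weight as "credit"; the one with the most
        # credit wins and pays back the total, so each server is chosen
        # `weight` times per `total` picks.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


# "a" is assumed to have five times the capacity of "b" or "c".
wrr = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
sequence = [wrr.pick() for _ in range(7)]
print(sequence)  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```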

IP Hash

The IP hash algorithm takes the client's IP address, runs it through a hash function, and maps the result to one of the servers. It then sends the request to that server.

This is useful because all requests from the same client go to the same server, as long as the list of servers does not change.
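The hashing step can be sketched as follows. This version uses SHA-256 from Python's `hashlib` and takes the result modulo the number of servers; that specific hash and mapping are assumptions for illustration. It deliberately avoids Python's built-in `hash()`, which is randomized per process for strings and would send a client to different servers after a restart.

```python
import hashlib
from typing import List


def ip_hash(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a server; the same IP always maps to the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and reduce it to a server index.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["server-1", "server-2", "server-3"]
first = ip_hash("203.0.113.7", servers)
# Repeated requests from the same client land on the same server.
assert all(ip_hash("203.0.113.7", servers) == first for _ in range(5))
```

With a plain modulo, adding or removing a server remaps most clients; consistent hashing is a common technique for reducing that churn.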

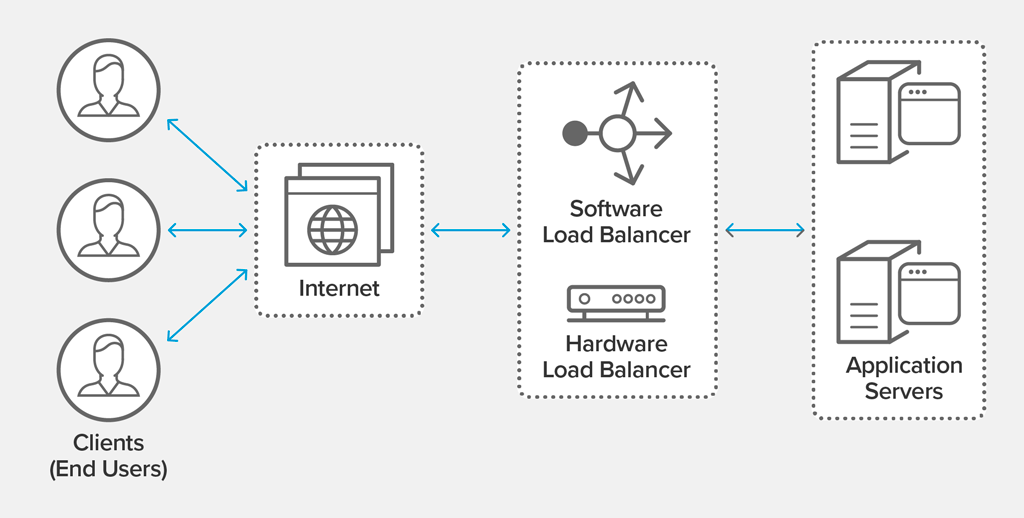

Types of Load Balancing Technologies

Load balancers come in two types:

- Hardware Load Balancers – A physical device that securely processes and redirects gigabytes of traffic to hundreds of different servers. They are usually housed in data centers, and virtualization can be used to create multiple digital load balancers that you control centrally.

- Software Load Balancers – An application that performs load balancing functions. It can be installed on any server or accessed as a third-party service.

Hardware load balancers require an up-front investment, plus configuration and ongoing maintenance. You might not use one to its full capacity, and if you exceed its capacity, you have to physically install another one.

On the other hand, software load balancers are flexible. You can scale them up and down with traffic, and they work well with cloud computing. They are generally cheaper and easier to set up and maintain over time.

Load Balancing in Action

Let's look at how load balancers work.

Companies usually run their application on multiple servers, often in a data center.

A user types www.mywebsite.com into their browser.

The DNS service resolves www.mywebsite.com to our load balancer.

Depending on the type of load balancer and algorithm, the load balancer will then route the request to the appropriate server.
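The flow above can be modeled as a toy, in-process simulation. Everything here is hypothetical: a dictionary stands in for DNS, each backend "server" is just a function that returns a string, and round robin is used as the routing strategy (any of the algorithms above could be swapped in). It shows the request path, not a production proxy.

```python
from itertools import cycle
from typing import Callable, Dict

# Hypothetical DNS table: the public hostname resolves to the load balancer.
DNS = {"www.mywebsite.com": "load-balancer"}


def make_backend(name: str) -> Callable[[str], str]:
    # Each "server" just reports which machine handled the request.
    return lambda path: f"{name} served {path}"


class LoadBalancer:
    def __init__(self, backends: Dict[str, Callable[[str], str]]) -> None:
        self.backends = backends
        self.rotation = cycle(list(backends))  # round robin over server names

    def handle(self, path: str) -> str:
        server = next(self.rotation)
        return self.backends[server](path)


lb = LoadBalancer({name: make_backend(name) for name in ["app-1", "app-2"]})
hosts = {"load-balancer": lb}


def browser_get(hostname: str, path: str) -> str:
    # 1. Resolve the hostname. 2. Send the request to whatever it resolved to.
    target = DNS[hostname]
    return hosts[target].handle(path)


print(browser_get("www.mywebsite.com", "/"))        # app-1 served /
print(browser_get("www.mywebsite.com", "/videos"))  # app-2 served /videos
```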

Load Balancing Considerations

Load balancing is not always simple.

The example of a single load balancer in front of multiple servers is a bit naive.

So let's look at some considerations and challenges of load balancing.

Stateful APIs

The generic model of load balancers works well with stateless APIs.

But what does stateless mean?

Stateless means there is no memory of the past: every request is handled as if it were the very first one.

But what about stateful APIs?

Stateful APIs need some context about you, such as your login status or shopping cart.

One way to keep that context is with sessions.

The web server saves information about you for a certain period of time, called a session.

The problem is that this information is saved on only one server.

So if your load balancer distributes requests across three servers, they do not share the same state, and a request that lands on a different server will not find your session.

There are two common ways to fix that:

- Share session data between all web servers using external storage (e.g. a Redis cluster).

- Force all requests from the same client to be dispatched to the same server. This is known as a sticky session.

The first method is a bit tricky to implement and out of the scope of this article, but I've provided another article that goes into more detail.

The second method, on the other hand, is a bit easier.

We just need a mapping between each client and a server.

There are multiple ways of doing this, for example by checking the client's IP address or a cookie. Many popular load balancing solutions offer this option, each with its own approach.

The downside of enabling sticky sessions is that load can become uneven: if one client sends a lot of requests, its server carries more load than the others.
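Here is a minimal sketch of cookie-based stickiness, the second method above. It assumes a hypothetical cookie named `lb_server`. New clients are assigned a server by round robin, and the balancer would then send that name back to the browser in a `Set-Cookie` header. If the cookie points at a server that is no longer in the pool, the client is simply reassigned.

```python
from itertools import cycle
from typing import Dict, List, Optional, Tuple

STICKY_COOKIE = "lb_server"  # hypothetical cookie name


class StickyBalancer:
    def __init__(self, servers: List[str]) -> None:
        self.servers = set(servers)
        self.rotation = cycle(servers)

    def route(self, cookies: Dict[str, str]) -> Tuple[str, Optional[str]]:
        """Return (server, cookie_value_to_set).

        cookie_value_to_set is None when the client already has a valid
        assignment and nothing needs to be sent back.
        """
        assigned = cookies.get(STICKY_COOKIE)
        if assigned is not None and assigned in self.servers:
            return assigned, None  # returning client: keep it on its server
        # New client (or its server was removed): pick one and remember it.
        server = next(self.rotation)
        return server, server


lb = StickyBalancer(["app-1", "app-2", "app-3"])
server, set_cookie = lb.route({})             # first visit, no cookie yet
print(server, set_cookie)                     # app-1 app-1
print(lb.route({STICKY_COOKIE: set_cookie}))  # ('app-1', None)
```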

WebSockets

Many applications require real-time communication, such as chat apps, online games, or audio/video chat using WebRTC.

In the past, we relied on polling, which means sending periodic HTTP requests to check for new data.

This method is slow and wasteful because every poll is a full HTTP request, often over a new TCP connection, even when there is nothing new.

This is why the WebSocket protocol was created.

It allows bidirectional communication over a single, long-lived connection.

This article isn't about WebSockets but if you want to know more, feel free to check out this article.

Anyway, back to load balancing.

If we load balance the traditional way, we balance the number of connections.

So we make sure that server A and server B have a similar number of active connections.

The problem with this approach for WebSockets is that connections stay open for a long time, and we can't predict how much processing power each connection will need.

For example, if we have video chat software, users with many friends may have a higher workload than users with no friends.
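A small illustration of the problem, with made-up numbers: least connection keeps the connection counts even, but each WebSocket connection carries a different workload (hypothetical messages per second), so the actual load ends up very uneven. The arrival order is chosen to make the effect obvious.

```python
from typing import Dict, List

# Hypothetical WebSocket connections with their workload in messages per
# second; busy users with many friends generate far more traffic.
connections: List[int] = [50, 1, 40, 1, 30, 1]

open_conns: Dict[str, int] = {"server-a": 0, "server-b": 0}
load: Dict[str, int] = {"server-a": 0, "server-b": 0}

for msgs_per_sec in connections:
    # Least connection: only the number of open connections is considered.
    server = min(open_conns, key=open_conns.get)
    open_conns[server] += 1
    load[server] += msgs_per_sec

print(open_conns)  # {'server-a': 3, 'server-b': 3}
print(load)        # {'server-a': 120, 'server-b': 3}
```

By the connection metric the servers look perfectly balanced, yet server A does about forty times the work.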

This is a complicated problem and out of the scope of this article.

But if you want to learn more about scaling WebSockets, check out this article.


Conclusion

As Thanos said, "Perfectly balanced, as all things should be."

I hope I've shown you why balance matters in your systems too.

Now you might ask me, "Okay Tamer, I get it. Load balancing is important. How do I implement it?"

Well, funny you should ask, because I just published two articles about implementing load balancing in NGINX and Kubernetes.

Thanks for reading.
